|

目前我有几个站大概十几万ip,pv三四百万左右,之前是分成好几台iis服务器,由于网站大部分是生成的静态页面,考虑到nginx对静态页面的优秀的表现,所有就进行了更换,把前后台分离,后台用一台服务器安装server 2008 ii7,前台单独用一台 Dual Xeon E5410 16GB CentOS 6 x86_64 Nginx

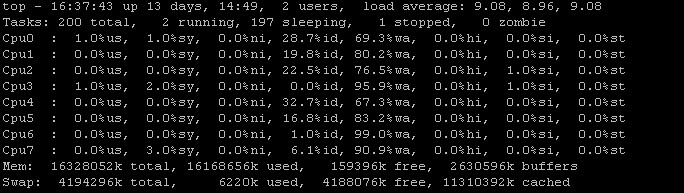

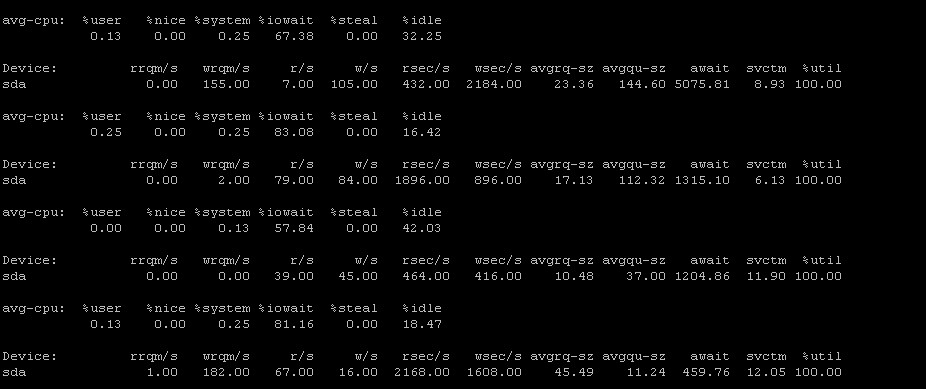

现在发现前台linux服务器到了高峰期的时候,几个网站打开速度都非常慢,查看服务器内存跟cpu均使用的非常少,但是io非常高

像目前这个情况,我在网上查了,有两种办法,一种是换ssd硬盘的服务器,

一种是把网站的一些经常访问的网页加载到内存中。

第一种,我查了,ssd的服务器基本没有,有也只是有些vps,配置都不咋滴。

第二种,不会弄啊,有什么其他的处理方法没?

我最大并发才1万左右,我就纳闷了,我看到很多人拿nginx测试,随随便便一个低端的服务器都可以抗起三四万并发,我才一万左右,而且服务器还是买的比较好的了,都抗不住。表示不解!!!

贴上我的配置文件:

user www www;

worker_processes 8;

#worker_cpu_affinity auto;

worker_cpu_affinity 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000;

worker_rlimit_nofile 65535;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

events {

use epoll;

worker_connections 20480;

}

http {

server_names_hash_bucket_size 512;

server_names_hash_max_size 512;

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

tcp_nopush on;

#keepalive_timeout 0;

keepalive_timeout 65;

tcp_nodelay on;

server_tokens off;

gzip on;

gzip_min_length 1k;

gzip_buffers 4 16k;

gzip_http_version 1.1;

gzip_comp_level 2;

gzip_types text/plain application/x-javascript text/css application/xml;

gzip_vary on;

include vhost/*.conf;

vhost 里面的网站的配置

server {

listen 80;

server_name www.xxx.com *.xxx.com;

location / {

root /home/wwwroot/xxx.com;

error_page 404 /404.html;

error_page 500 502 503 504 /50x.html;

}

}

兄弟,可以开 open_file_cache 。根据实际的调整:

## Set the OS file cache. open_file_cache max=3000 inactive=120s; open_file_cache_valid 45s; open_file_cache_min_uses 2; open_file_cache_errors off;

改完看看效果。

open_file_cache max=65535 inactive=120s;

open_file_cache_valid 45s;

open_file_cache_min_uses 2;

open_file_cache_errors off;

把这个添加进去了,好像没多大用!另外我还参照网上的一些修改了些内核设置:

net.ipv4.ip_forward = 0

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

kernel.sysrq = 0

kernel.core_uses_pid = 1

net.ipv4.tcp_syncookies = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.shmmax = 68719476736

kernel.shmall = 4294967296

net.ipv4.tcp_max_tw_buckets = 6000

net.ipv4.tcp_sack = 1

net.ipv4.tcp_window_scaling = 1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

net.core.wmem_default = 8388608

net.core.rmem_default = 8388608

net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

net.core.netdev_max_backlog = 262144

net.core.somaxconn = 262144

net.ipv4.tcp_max_orphans = 3276800

net.ipv4.tcp_max_syn_backlog = 262144

net.ipv4.tcp_timestamps = 0

net.ipv4.tcp_synack_retries = 1

net.ipv4.tcp_syn_retries = 1

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_mem = 94500000 915000000 927000000

net.ipv4.tcp_fin_timeout = 1

net.ipv4.tcp_keepalive_time = 30

net.ipv4.ip_local_port_range = 1024 65000

外加,把线程加大到24个线程,然后

worker_cpu_affinity 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000 00000001 00000010 00000100 00001000 00010000 00100000 01000000 10000000;

原文地址:http://www.oschina.net/question/1465961_146523?sort=default&p=2#answers |